Intel is working on a rival to Nvidia’s DLSS 3. Intel and researchers from the University of California published a paper detailing the technology, currently dubbed ExtraSS, at SIGGRAPH Asia 2023 (spotted by Wccftech). Like DLSS 3, it aims to improve performance by generating new frames. Unlike DLSS 3, however, ExtraSS uses frame extrapolation rather than frame interpolation.

Interpolation is how DLSS 3 (and AMD’s FSR 3) works: it takes two sequential rendered frames and compares them to generate a new frame in between. This naturally means you’re playing on a slight delay, as the tech needs both the current and the next frame to do its dirty work. Intel’s proposal uses extrapolation instead, predicting the next frame from previous frames only. Because it never has to wait for a future frame, it avoids the added latency that interpolation introduces in DLSS 3.
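To make the difference concrete, here’s a toy Python sketch (our own illustration, not code from Intel, Nvidia, or AMD) that tracks a single object’s position. Interpolation needs the next rendered frame; extrapolation only looks backward. The latency model is a deliberately simplified assumption: it supposes the newest rendered frame is held back by half a render interval so the generated frame can be shown first, and it ignores the cost of generating frames. The function names are hypothetical.

```python
def interpolate(prev_pos: float, next_pos: float, t: float = 0.5) -> float:
    # Interpolation: the generated frame sits *between* two rendered frames,
    # so it can't be built until the next rendered frame already exists.
    return prev_pos + (next_pos - prev_pos) * t


def extrapolate(older_pos: float, latest_pos: float, t: float = 0.5) -> float:
    # Extrapolation: continue the motion seen in past frames forward in time.
    return latest_pos + (latest_pos - older_pos) * t


def added_delay_ms(render_fps: float, method: str) -> float:
    # Toy assumption: interpolation holds the newest rendered frame back by
    # half a render interval; extrapolation adds no wait. Generation cost ignored.
    interval_ms = 1000.0 / render_fps
    return interval_ms / 2 if method == "interpolation" else 0.0


if __name__ == "__main__":
    # Object moving 10 px per rendered frame: x = 0, then 10, then 20.
    print(interpolate(10.0, 20.0))  # 15.0 (had to wait for the frame at x=20)
    print(extrapolate(0.0, 10.0))   # 15.0 (used only frames already rendered)
    print(added_delay_ms(60, "interpolation"))  # ~8.33 ms at 60 rendered fps
```

For steady motion, both methods place the generated frame at 15. If the object suddenly stops at 10, `interpolate(10.0, 10.0)` correctly returns 10 while extrapolation still guesses 15, a hint that predicting frames is harder than it sounds.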

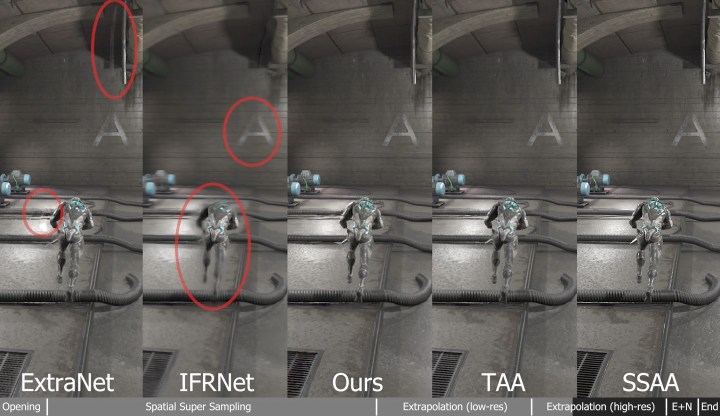

Extrapolation isn’t free of problems, which is probably why we haven’t seen it applied in games yet. The researchers point out one such issue: disocclusion. If your character is blocking a certain part of a scene and then moves, that part of the scene suddenly becomes visible. Because extrapolation is essentially predicting future frames, it doesn’t have any details on what your character was blocking. This creates disocclusion artifacts, which look like a shimmering ghost following moving objects around. But the researchers say they’re able to solve this problem.
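Here’s a deliberately tiny Python sketch of where those disocclusion holes come from, assuming a one-pixel-tall image, a static background, and a known foreground mask and motion. `forward_warp_row` and `holes` are hypothetical names for illustration; this is a naive forward warp, not the warping method from the paper.

```python
from typing import List, Optional


def forward_warp_row(
    row: List[str], is_foreground: List[bool], shift: int
) -> List[Optional[str]]:
    """Predict the next frame by pushing last frame's pixels along their motion."""
    out: List[Optional[str]] = [None] * len(row)  # None = no data for this pixel
    # Assumption: the background doesn't move, so it stays where it was...
    for i, (px, fg) in enumerate(zip(row, is_foreground)):
        if not fg:
            out[i] = px
    # ...while the character moves `shift` pixels and is drawn on top.
    for i, (px, fg) in enumerate(zip(row, is_foreground)):
        if fg and 0 <= i + shift < len(row):
            out[i + shift] = px
    return out


def holes(frame: List[Optional[str]]) -> List[int]:
    """Pixels the previous frame never saw: the disoccluded region."""
    return [i for i, px in enumerate(frame) if px is None]


if __name__ == "__main__":
    row = list("abCCef")                 # lowercase = background, "C" = character
    mask = [px.isupper() for px in row]
    predicted = forward_warp_row(row, mask, shift=2)
    print(predicted)         # ['a', 'b', None, None, 'C', 'C']
    print(holes(predicted))  # [2, 3]
```

Pixels 2 and 3 were hidden behind the character in the only frame the extrapolator has, so there’s nothing to copy into them. A real system has to fill that gap somehow, and filling it poorly is what produces the ghosting described in the article.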

Or, in the researchers’ own words: “Our method proposes a new warping method with a lightweight flow model to extrapolate frames with better qualities [compared] to the previous frame generation methods and less latency compared to interpolation-based methods.”

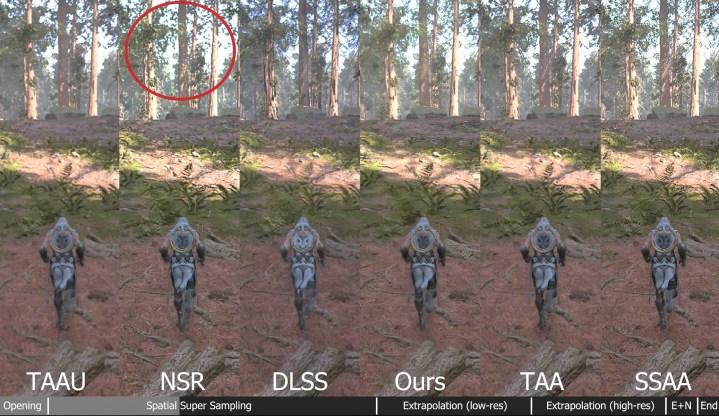

In addition to frame extrapolation, the framework the researchers show includes supersampling, similar to the base versions of Nvidia DLSS and AMD FSR. The researchers say this part of the implementation delivers quality comparable to existing approaches, which they highlighted in a brief demo video.

The researchers say they’re able to achieve high-quality results by using the geometry buffer (G-buffer) to guide warping. As the paper states, “Our method still shows comparable results due to the good initialization provided by G-buffer guided warping and shading refinement modules. Even using the target resolution as inputs, [temporal anti-aliasing upscaling, or TAAU] generates lagging glossy reflections, while our [ExtraSS] has correct shadings.”
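One common way geometry data can help warping is a depth check during backward reprojection, shown here as a minimal Python sketch. This is a standard trick from temporal rendering techniques, not ExtraSS’s actual G-buffer guided warping module, and it assumes the new frame’s depth and per-pixel motion are already available (for example, from a cheap geometry-only pass). `backward_warp` and its `tol` parameter are hypothetical names.

```python
from typing import List, Optional, Tuple


def backward_warp(
    prev_color: List[str],
    prev_depth: List[float],
    cur_depth: List[float],
    motion: List[int],
    tol: float = 0.05,
) -> Tuple[List[Optional[str]], List[bool]]:
    """Fill each pixel of the new frame by looking up where it was last frame."""
    out: List[Optional[str]] = []
    valid: List[bool] = []
    for x, depth in enumerate(cur_depth):
        # motion[x] = pixels this surface moved since the previous frame,
        # so it used to sit at x - motion[x].
        src = x - motion[x]
        in_bounds = 0 <= src < len(prev_color)
        # If the previous frame saw a surface at a very different depth there,
        # this pixel was hidden last frame (disoccluded): don't trust the copy.
        if in_bounds and abs(prev_depth[src] - depth) <= tol * depth:
            out.append(prev_color[src])
            valid.append(True)
        else:
            out.append(None)
            valid.append(False)
    return out, valid


if __name__ == "__main__":
    prev_color = list("abCCef")
    prev_depth = [10.0, 10.0, 2.0, 2.0, 10.0, 10.0]  # character is closer (depth 2)
    # Assumed geometry of the new frame: the character moved 2 pixels right.
    cur_depth = [10.0, 10.0, 10.0, 10.0, 2.0, 2.0]
    motion = [0, 0, 0, 0, 2, 2]
    color, valid = backward_warp(prev_color, prev_depth, cur_depth, motion)
    print(color)  # ['a', 'b', None, None, 'C', 'C']
    print(valid)  # [True, True, False, False, True, True]
```

Because the new frame’s geometry is known, the depth test flags exactly which pixels can’t be copied from the previous frame, leaving a later step (plausibly the kind of shading refinement the paper mentions) to fill or correct them.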

Right now, this is just a research paper, not a product. We’ll still need to see whether Intel can apply the technique to XeSS, its upscaling technology, but that seems to be where this is headed. The paper lists four Intel engineers among its authors, including Anton Kaplanyan, the original creator of Nvidia’s DLSS.

We currently expect Intel to reveal details about its next-gen Battlemage GPUs in the second half of 2024. It’s possible that we’ll see more details on this frame extrapolation technique around that time, but it’s too soon to say right now.
