Emerging startup Physical Intelligence has no interest in building robots. Instead, the team has something better in mind: powering the hardware with the continuously learning generalist ‘brains’ of AI software, so existing machines will be able to autonomously carry out a growing number of tasks that require precise movements and dexterity – including housework.

Over the past year we’ve seen robot dogs dancing, even some equipped to shoot flames, as well as increasingly advanced humanoids and machines built for specialist roles on assembly lines. But we’re still waiting for our Rosey the Robot from The Jetsons.

But we may be there soon. San Francisco’s Physical Intelligence (Pi) has revealed its generalist AI model for robotics, which can empower existing machines to perform various tasks – in this case, getting the washing out of the dryer and folding clothes, delicately packing eggs into their container, grinding coffee beans and ‘bussing’ tables. It’s not a stretch to imagine that this system could see these mobile metal helpers rolling through the house, vacuuming, packing and unpacking the dishwasher, making the bed, looking in the refrigerator and pantry to catalog their contents and coming up with a plan for dinner – and, hey, why not, also cooking that dinner.

It’s with this vision that Pi reveals its “general-purpose robot foundational model” known as π0 (pi-zero).

At Physical Intelligence (π) our mission is to bring general-purpose AI into the physical world.

We’re excited to show the first step towards this mission – our first generalist model π₀ 🧠 🤖

Paper, blog, uncut videos: https://t.co/XZ4Luk8Dci pic.twitter.com/XHCu1xZJdq

— Physical Intelligence (@physical_int) October 31, 2024

“We believe this is a first step toward our long-term goal of developing artificial physical intelligence, so that users can simply ask robots to perform any task they want, just like they can ask large language models (LLMs) and chatbot assistants,” the company explains. “Like LLMs, our model is trained on broad and diverse data and can follow various text instructions. Unlike LLMs, it spans images, text, and actions and acquires physical intelligence by training on embodied experience from robots, learning to directly output low-level motor commands via a novel architecture. It can control a variety of different robots, and can either be prompted to carry out the desired task, or fine-tuned to specialize it to challenging application scenarios.”

In the company’s research, pi-zero demonstrates how a variety of jobs requiring different levels of dexterity and movement can be performed by hardware controlled by the AI model. In total, the foundational model carried out 20 tasks, all requiring different skills and manipulations.

“Our goal in selecting these tasks is not to solve any particular application, but to start to provide our model with a general understanding of physical interactions – an initial foundation for physical intelligence,” the team notes.

π₀ is a VLA generalist:

– it performs dexterous tasks (laundry folding, table bussing and many others)

– transformer+flow matching combines benefits of VLM pre-training and continuous action chunks at 50Hz

– it’s pre-trained on a large π dataset spanning many form factors pic.twitter.com/zX9hvVdQuH

— Physical Intelligence (@physical_int) October 31, 2024

Now, I’m the last person at New Atlas to get excited about robotics, largely because most of what we’ve seen has been specialist machines – and, to be honest, I’ve had my fill of humanoids moving boxes from point A to B. In biology, specialists – for example bees, butterflies and the koala – exploit one niche and do it exceptionally well. That is, until external forces, such as habitat loss or disease, reveal their limitations.

However, generalists – like a raccoon or a grizzly bear – may not be as good at occupying any one niche as specialists are, but they’re far more adaptable to a wider range of habitats and food sources, which ultimately makes them better suited to dynamic changes in the environment.

Similarly, generalist robots will be able to do more than expertly build a brick wall; and, capable of learning, they will be able to adapt to different challenges in the physical world and have a suite of ever-evolving skills.

Pi-zero combines internet-scale vision-language model (VLM) pre-training with flow matching, which it uses to generate continuous motor commands. Its pre-training included 10,000 hours of “dexterous manipulation data” spanning seven different robot configurations and 68 tasks. This was in addition to the existing robot manipulation datasets OXE, DROID and Bridge.

We compare π₀ and π₀-small (non-VLM version) to a number of prior models:

– Octo and OpenVLA for 0-shot VLA

– ACT and Diffusion Policy for single task

It outperforms zero-shot on seen tasks, fine-tuning to new tasks, and at following language pic.twitter.com/TUDsFjitDr

— Physical Intelligence (@physical_int) October 31, 2024

“Dexterous robot manipulation requires pi-zero to output motor commands at a high frequency, up to 50 times per second,” the team notes. “To provide this level of dexterity, we developed a novel method to augment pre-trained VLMs with continuous action outputs via flow matching, a variant of diffusion models. Starting from diverse robot data and a VLM pre-trained on Internet-scale data, we train our vision-language-action flow matching model, which we can then post-train on high-quality robot data to solve a range of downstream tasks.

“To our knowledge, this represents the largest pre-training mixture ever used for a robot manipulation model,” the researchers noted in their study.
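For readers curious what “continuous action outputs via flow matching” looks like in practice, here is a minimal sketch of the sampling step only. It is not Pi’s code. It assumes a straight-line path from noise to actions and uses a toy, hand-written velocity function in place of the trained network. The chunk length, action dimension and number of integration steps are all illustrative assumptions; only the 50 Hz control rate comes from the company’s description.

```python
import random

CONTROL_HZ = 50   # from the article: motor commands at up to 50 per second
CHUNK_LEN = 50    # assumption: actions per chunk (one second at 50 Hz)
ACTION_DIM = 7    # assumption: e.g. joint targets for a single arm
NUM_STEPS = 10    # assumption: number of Euler integration steps


def toy_velocity(actions, tau, target):
    """Hypothetical stand-in for a learned velocity network.

    A real model would condition on camera images and a text instruction
    and would never see `target`. For one known target chunk and a
    straight-line noise-to-data path, the ideal velocity is
    (target - actions) / (1 - tau), which is what this returns.
    """
    return [
        [(t - a) / (1.0 - tau) for a, t in zip(row_a, row_t)]
        for row_a, row_t in zip(actions, target)
    ]


def sample_action_chunk(velocity_fn, target, num_steps=NUM_STEPS, seed=0):
    """Integrate from Gaussian noise (tau = 0) towards an action chunk (tau = 1)."""
    rng = random.Random(seed)
    actions = [[rng.gauss(0.0, 1.0) for _ in range(ACTION_DIM)]
               for _ in range(CHUNK_LEN)]
    delta = 1.0 / num_steps
    for k in range(num_steps):
        tau = k * delta  # tau stays below 1, so (1 - tau) is never zero
        velocity = velocity_fn(actions, tau, target)
        # Forward Euler step along the velocity field.
        actions = [[a + delta * v for a, v in zip(row_a, row_v)]
                   for row_a, row_v in zip(actions, velocity)]
    return actions


# Hypothetical target chunk: a slow ramp on every joint.
target_chunk = [[0.01 * step] * ACTION_DIM for step in range(CHUNK_LEN)]
chunk = sample_action_chunk(toy_velocity, target_chunk)
chunk_seconds = CHUNK_LEN / CONTROL_HZ  # how long one chunk lasts on the robot
```

The point of the sketch is the shape of the computation: a whole chunk of future actions is refined from noise in a handful of steps, then played out at the control rate, rather than being predicted one discrete token at a time.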

While the company is still in its early days of research and development, Pi co-founder and CEO Karol Hausman – a scientist who previously worked on robotics at Google – believes its foundational model will overcome existing hurdles to generalisation, including the time and cost involved in training the hardware on physical-world data in order to learn new tasks. The Pi team also includes co-founder Sergey Levine, who has pioneered robotics development at the University of California, Berkeley, and Brian Ichter, a former research scientist at Google.

In 2023, satirist and architect Karl Sharro went viral with his tweet: “Humans doing the hard jobs on minimum wage while the robots write poetry and paint is not the future I wanted.” The same year, Hollywood ground to a halt as members of the Writers Guild of America went on strike, seeing the bleak path ahead for creatives in the face of this new age of technology.

And while AI may still be coming – and has already come – for many of our jobs (you don’t have to remind us journalists of that), Pi’s vision feels more in line with that of the mid-20th century futurists, who saw a world in which the machines made our lives easier. Call me naive, perhaps, but if a robot comes for my housework, it can take it.

You can see more videos of the drills the team put the pi-zero robots through in the Pi blog post, but here’s one that demonstrates their impressive – and delicate – work.

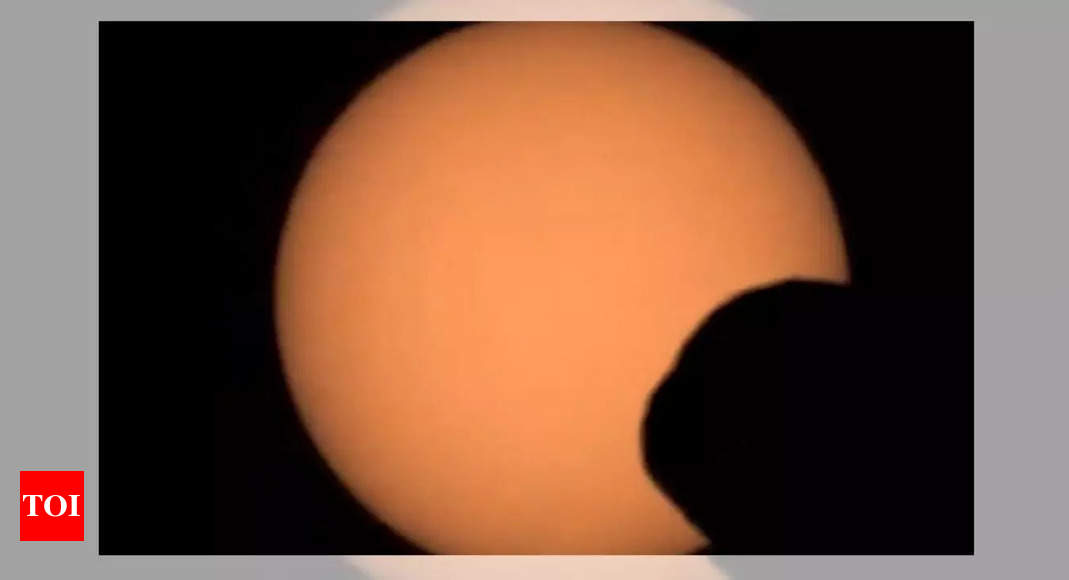

Sorting processed eggs

The research paper on pi-zero’s development and training can be found here.

Source: Physical Intelligence