Every facet of AI feels like it’s advanced by a decade in the last year, and in the whirlwind of new releases and capabilities, you may have missed something important: interactive video chatbots that can see, hear and converse with you in real time.

Look, don’t mind me, I’m over here still blown away by how good ChatGPT’s advanced voice mode is. Multimodal AIs are proving themselves every bit as capable at expressing themselves in audio and video form as they have in the written word.

A couple of years ago, the idea of having a video chat with a fairly lifelike, photorealistic AI would have seemed ridiculously futuristic. Yet here we are in 2024: I’ve just spoken to five of them, and I’m now so conditioned to immediately accepting new breakthrough technologies that it already feels completely normal.

The Good

I’m about to complain about their current shortcomings, but first, let’s talk about what they do well. Speed, for me, has to be top of the list. You speak, and the video avatar responds with no more lag than you’d get with a voice chat – around 600 milliseconds, according to Tavus. Indeed, I’ve had plenty of video chats with actual humans where there’s been more lag than this.

Of course, the avatars look and sound fantastic – and naturally, their conversational abilities make last-gen models like Siri and Alexa feel like black and white TVs.

It’s truly stunning how much these models can do in real time – never mind the conversation, the voice, the body language – they can also look back at you through your laptop or device camera, taking stock of your surroundings and incorporating them into the conversation.

“I see you’ve got some guitars and keyboards behind you, Loz,” AI agent ‘Carter’ tells me. “And those sound-absorbing panels on the roof … Looks like you’ve got a serious music production space there, loving those creative vibes!”

These agents can be given personalities, memories, scenarios, habits, tasks, boundaries, interaction goals, scripts, and access to whatever information they’ll need to do their job – jobs like automated sales, customer service, information assistants, whatever human-facing tasks can be done over a video chat interface.

They can converse comfortably in a range of languages, without losing the essential tone of their voices. They can appear in a range of different environments; walking down the street, driving a car, hanging out at a cafe, or sitting in whatever office you can dream up.

And they can look and sound just like you. A single two-minute video upload is all Tavus needs to capture your look and your voice, which it’ll then turn into a programmable “digital twin” conversation agent that’s your own spitting image.

We’re thrilled to introduce the world’s fastest Conversational Video Interface for developers.

Build rich, real-time video experiences with digital twins that can speak, see, and hear.

⏱️ Less than one second of latency

🤖 Realistic, intelligent digital twins

🔌 Plug and play… pic.twitter.com/KjKk2b6v68

Tavus (@heytavus), August 15, 2024

The Bad

These things are still very early versions of what’s coming steaming down the line at us. The Carter bot doesn’t always get its lips perfectly synchronized with its voice. The facial expressions aren’t always in the right places. He glitches a little; the eyes seem to reposition themselves on his head now and then, and the video or audio occasionally stutters to reveal his digital nature.

And, as with ChatGPT, the conversation is still a bit stunted. You need to take turns, and if you stop to think too long in the middle of a sentence, he’ll start replying when a human would (ideally) give you a little more space. AIs have yet to master the art of gentle interruption and prompting.
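
To make that turn-taking problem concrete, here is a minimal sketch of the simplest rule a voice agent could use: treat the speaker as finished once they have been silent for a fixed length of time. This is purely an illustration, not a description of how Tavus or ChatGPT actually decide when to reply. The function name, the 20 ms frame length and the 700 ms threshold are all assumptions of mine.

```python
from typing import Optional, Sequence

FRAME_MS = 20  # assumed length of one audio frame, in milliseconds


def end_of_turn_frame(voiced: Sequence[bool], silence_ms: int = 700) -> Optional[int]:
    """Return the index of the frame where a fixed-silence rule decides the
    speaker has finished, or None if it never decides that.

    `voiced` holds one flag per audio frame: True if speech was detected.
    This is a hypothetical helper for illustration only.
    """
    needed = silence_ms // FRAME_MS  # consecutive silent frames required
    heard_speech = False
    silent_run = 0
    for i, is_voiced in enumerate(voiced):
        if is_voiced:
            heard_speech = True
            silent_run = 0  # any speech resets the silence counter
        elif heard_speech:
            silent_run += 1
            if silent_run >= needed:
                return i  # the agent would start replying here
    return None


# A speaker talks for 1 s, pauses to think for 1 s, then talks for 1 s more.
frames = [True] * 50 + [False] * 50 + [True] * 50

print(end_of_turn_frame(frames, silence_ms=700))   # cuts in during the pause
print(end_of_turn_frame(frames, silence_ms=1500))  # waits the pause out
```

With the 700 ms threshold, the rule cuts in partway through the one-second thinking pause, which is the behaviour I ran into. Raising the threshold avoids the interruption, but it also makes every reply slower, so a fixed timer alone can't really solve the problem.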

It doesn’t matter. The speed at which this tech advances is truly staggering. In a few months Carter will be old news, and all these gaps will close rapidly. Most of the world only learned about ChatGPT last year – now you’re looking at the AI, and it’s looking at you, in real-time video conversations.

The Ugly

Indeed, part of what this thing needs to do to improve is to get better at reading body language, which might help it tell the difference between somebody trailing off, pausing for thought, or having finished their sentence.

And then, of course, it needs to learn how to adjust its own body language in response to yours, and to advance its goals in the communication.

And here, those of you who have followed my thoughts on AI over the last couple of years will start to see some of the scary potential. Forgive me as we take off into the realm of speculation – but the rapid convergence of technologies in this space makes some things pretty clear to me.

Back in April, a study found that text-based AIs were already some 82% more persuasive than humans – and at the same time, we started seeing the first emotionally intelligent AI chat services, capable of reading the tone of your voice and responding to the emotional content as well as the words.

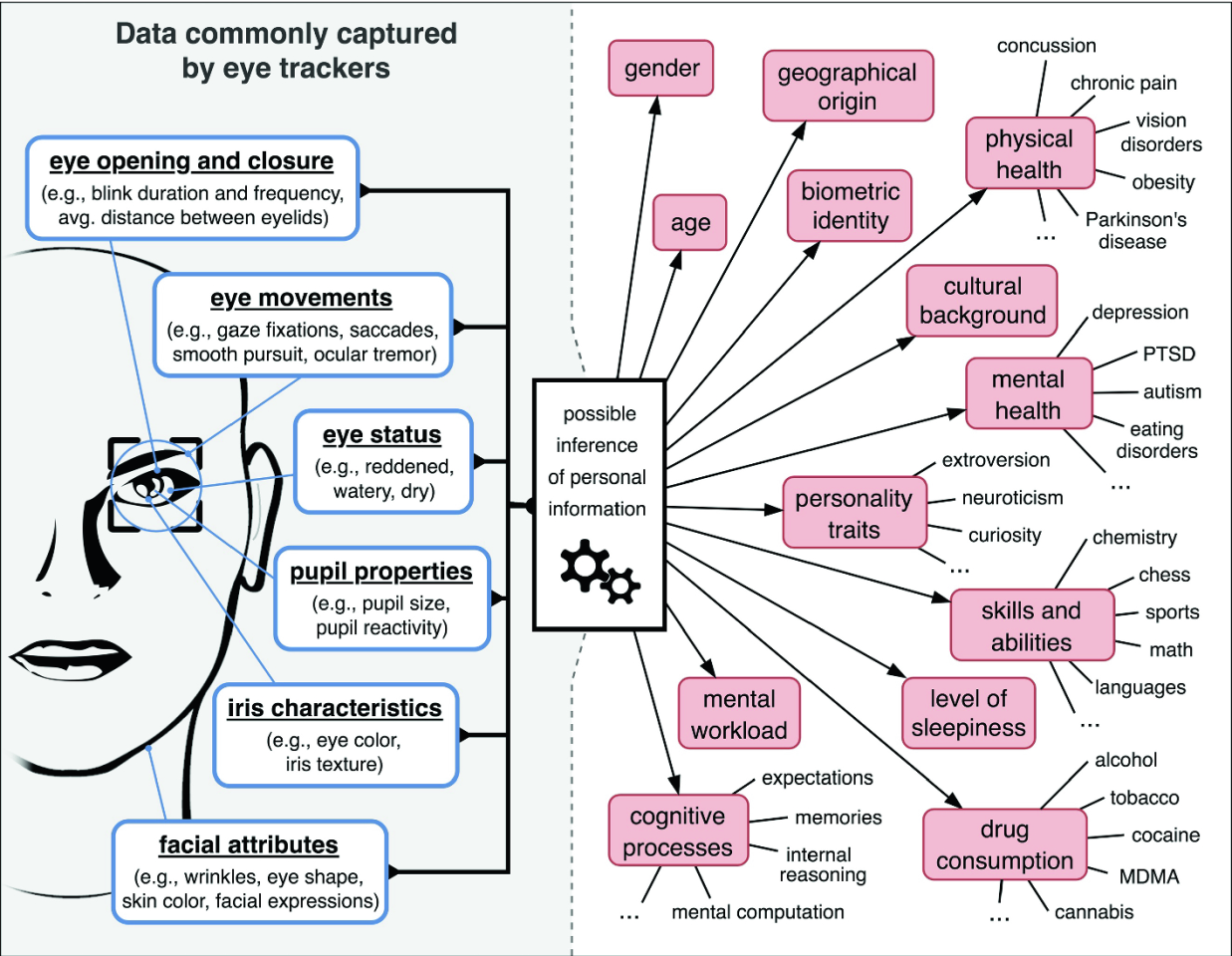

Oh, and here’s some light reading if you’re wondering how much an AI might be able to learn about you from your body language … Back in 2021, a research review absolutely floored me by outlining all the things AI could tell about a person just by tracking their eye movements.

So when I look at Carter looking back at me, I’m amazed by the progress and blown away by the technology, but I also see the embryonic form of the most powerful persuasive tool in history. This one might just beat religion, friends.

Given just a short scrap of video, a scammer could have an agent video-call you as your own mother, and cold-read you like no human expert ever could, constantly monitoring your facial expressions, tone of voice and body language to keep tabs on whether it’s fooling you or not. If you start to cotton on, it could notice almost before you do, and start deploying all sorts of distraction or refocusing techniques to bypass objections, create a sense of urgency, and move you toward its ultimate goal, whatever that is.

That’s just the criminal side of things … Imagine trying to get a refund when the customer service agent you’re talking to is a master conversational tactician, a superhuman body language expert and voice tone analyst all rolled into one. Imagine how powerful the sell’s going to be when you’re talking to a galaxy-brained super-salesman who can read you like a book.

That’s not to mention how effective these things will be as misinformation vectors, virtual girlfriends, divisive political tools … Perhaps even police detectives or interrogators. They’ll be incredibly believable one-on-one interactions, weaponizing our in-built physical tendencies to make our bodies betray us. The balance of power here will be incredibly one-sided, if they can just keep us on the line.

In a positive sense, they’ll become incredible therapists, doctors, assistants, coaches, mentors, trainers, teachers and probably friends. But it’ll be more important than ever to bear in mind the base truth: if you don’t own an AI, somebody else does, and it’s working for them first, and you second. So be very careful what you choose to reveal, and only deal with companies that you trust …

… or not. There might be no real way of protecting yourself from this stuff. We, as a species, might just have to adapt to a new reality.

You can have a two-minute demo chat with Carter yourself at the Tavus website. Tell him I said hi.

Oh, and you can take a look at what HeyGen is doing in this space as well if you want to see some similar alternatives, although I was less impressed by HeyGen’s demos.

Source: Tavus